Stupid Simple System Design

I keep seeing discussions on Hacker News about this tech or that one: some revolutionary new data storage system, or a company claiming it cut its bill by X% by changing its architecture.

But I felt like I lacked the knowledge to weigh the pros and cons of each on my own, instead of being convinced by anyone who had a decent argument to justify an architectural or technological choice.

So I recently started reading the well-known book Designing Data-Intensive Applications.

While reading it, I realized there are some basics of system design that I had overlooked, building blocks to be more precise. So here, I list them and give the information needed to understand what they are and why they exist.

DNS

The Domain Name System (DNS) is essential to the functioning of the internet as we know it. It is what allows us to type a simple domain name into our browser and end up on the corresponding server.

It can be described as the “phone book” of the internet. Its primary purpose is to translate human-friendly domain names into machine-readable IP addresses. For instance, google.com resolves to an IP address such as 172.217.20.206.

When we enter a domain name in our browser, DNS is responsible for finding the corresponding IP address; the browser then sends its request to that server. The lookup goes through a series of hierarchical queries: it starts at the root servers, moves on to the top-level domain (TLD) servers (for example, the ones for .com), and progressively narrows down to the authoritative DNS server for the specific domain we are looking for.
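
To make the hierarchy concrete, here is a toy resolver that walks the same three steps with hard-coded, hypothetical data: the root points to a TLD server, the TLD server points to the domain's authoritative server, and that server returns the IP. The server names are made up, the IP comes from the 192.0.2.0/24 documentation range, and a real resolver speaks the DNS protocol over the network and caches answers, which this sketch skips.

```python
# Hypothetical, hard-coded data standing in for real DNS servers.
# 192.0.2.0/24 is reserved for documentation, so this IP is not a real host.
ROOT_SERVER = {"com": "tld-com"}  # root: which server handles each TLD
TLD_SERVERS = {
    "tld-com": {"example.com": "ns1.example.com"},  # TLD: each domain's authoritative server
}
AUTHORITATIVE_SERVERS = {
    "ns1.example.com": {"example.com": "192.0.2.10", "www.example.com": "192.0.2.10"},
}


def resolve(domain: str) -> str:
    """Walk root -> TLD -> authoritative server, like an iterative resolver."""
    name = domain.rstrip(".")
    labels = name.split(".")
    tld = labels[-1]
    registered = ".".join(labels[-2:])  # assumes a two-label domain such as example.com

    tld_server = ROOT_SERVER[tld]                      # step 1: ask a root server
    auth_server = TLD_SERVERS[tld_server][registered]  # step 2: ask the TLD server
    return AUTHORITATIVE_SERVERS[auth_server][name]    # step 3: ask the authoritative server


print(resolve("www.example.com"))  # 192.0.2.10
```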

Load balancer

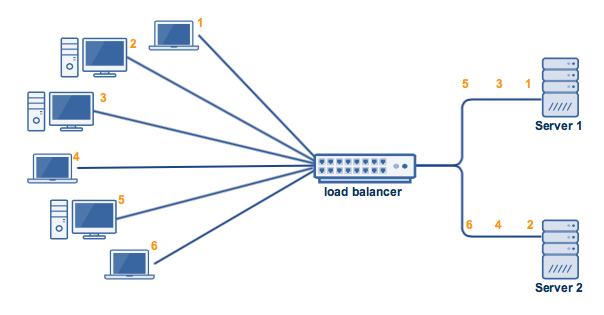

A load balancer is a component that distributes incoming network traffic across multiple servers or resources.

Load balancing becomes necessary once a system runs at scale: it ensures high availability, improves performance, and prevents any single server from being overloaded. Essentially, our load balancer acts as a traffic cop, directing requests to the most suitable server in a pool, optimizing resource utilization, and minimizing downtime due to server failures.

A basic algorithm to balance requests evenly between servers is round-robin: each new request goes to the next server in the list, wrapping back to the first one after the last.
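
A minimal round-robin sketch, assuming a fixed list of hypothetical server names: `itertools.cycle` hands them out in order and wraps around at the end. It is not thread-safe, which a real load balancer would have to handle.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers: list[str]) -> None:
        self._servers = cycle(servers)  # endless iterator: a, b, c, a, b, c, ...

    def next_server(self) -> str:
        return next(self._servers)


lb = RoundRobinBalancer(["server-a", "server-b", "server-c"])
print([lb.next_server() for _ in range(5)])
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```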

But there are other ways to balance requests:

- Weighted round-robin, where the load balancer knows the capacity of each node and sends more requests to the nodes with more capacity.

- Least connections, which sends each request to the server with the fewest active connections, to prevent connections from piling up on one server.

- Random, which picks a server at random for each request.
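
The three alternatives above can be sketched as follows. The weighted version is the "smooth" weighted round-robin variant that nginx uses, which interleaves servers instead of sending bursts to the biggest one; the server names and weights are hypothetical, and none of these classes is thread-safe.

```python
import random


class SmoothWeightedRoundRobin:
    """Weighted round-robin that interleaves servers instead of bursting."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # Every server earns its weight; the highest scorer is picked and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


class LeastConnectionsBalancer:
    """Sends each request to the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        server = min(self.active, key=self.active.get)  # ties go to the first server listed
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the connection closes


def pick_random(servers: list[str], rng: random.Random) -> str:
    """Uniformly random choice: simple, and balanced on average over many requests."""
    return rng.choice(servers)


wrr = SmoothWeightedRoundRobin({"big": 3, "small": 1})
print([wrr.next_server() for _ in range(4)])  # ['big', 'big', 'small', 'big']
```

Least connections needs the balancer to be told when a connection ends (`release`), which is the price it pays for reacting to the servers' actual load.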

Another good practice is to make your load balancer aware of the metrics that matter for your use case. For instance, you may want to take into account the transfer usage of each server.
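
As a sketch of a metric-aware strategy, assume each server reports how many bytes it sent over the last minute (a hypothetical `bytes_sent_last_minute` field). The balancer then picks the server with the lowest transfer usage and breaks ties on the connection count, so it may prefer a server with more connections if those connections are cheap.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    active_connections: int
    bytes_sent_last_minute: int  # hypothetical metric reported by each server


def pick_least_transfer(stats: list[ServerStats]) -> str:
    """Prefer the server that sent the least data recently; tie-break on connections."""
    best = min(stats, key=lambda s: (s.bytes_sent_last_minute, s.active_connections))
    return best.name


stats = [
    ServerStats("server-a", active_connections=10, bytes_sent_last_minute=5_000_000),
    ServerStats("server-b", active_connections=50, bytes_sent_last_minute=1_000_000),
]
print(pick_least_transfer(stats))  # server-b, despite having more connections
```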

Messaging system

Messaging systems are extremely useful, if not necessary, in most distributed applications at scale.

They let different services exchange data efficiently by sending and receiving messages or events asynchronously: the sender does not have to wait for the receiver to process a message.

In essence, a messaging system serves as a backbone for distributed systems, supporting the reliable and scalable exchange of information.

Popular messaging technologies include Apache Kafka (a distributed event store and stream-processing platform) and RabbitMQ (a message broker).
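
The core idea, producers publishing to a topic without waiting for consumers, can be shown with a toy in-process broker built on Python's `queue.Queue` and threads. This is not how Kafka or RabbitMQ work internally (no persistence, no acknowledgments, no partitions), and the topic and service names are hypothetical.

```python
import queue
import threading
from collections import defaultdict
from typing import Optional


class InMemoryBroker:
    """Toy broker: each subscriber of a topic gets its own queue (fan-out)."""

    def __init__(self) -> None:
        self._topics: dict[str, list[queue.Queue]] = defaultdict(list)
        self._lock = threading.Lock()

    def subscribe(self, topic: str) -> queue.Queue:
        inbox: queue.Queue = queue.Queue()
        with self._lock:
            self._topics[topic].append(inbox)
        return inbox

    def publish(self, topic: str, message: Optional[dict]) -> None:
        # The producer only enqueues and returns; consumers read at their own pace.
        with self._lock:
            subscribers = list(self._topics[topic])
        for inbox in subscribers:
            inbox.put(message)


def consume(inbox: queue.Queue, received: list) -> None:
    while True:
        message = inbox.get()  # blocks until a message arrives
        if message is None:    # sentinel used here to stop the consumer
            break
        received.append(message["order_id"])


broker = InMemoryBroker()
received = {"billing": [], "email": []}  # hypothetical downstream services
threads = [
    threading.Thread(target=consume, args=(broker.subscribe("order.created"), received[name]))
    for name in received
]
for t in threads:
    t.start()

broker.publish("order.created", {"order_id": 42})
broker.publish("order.created", None)  # tell both consumers to stop
for t in threads:
    t.join()
print(received)  # {'billing': [42], 'email': [42]}
```

The producer never calls the billing or email services directly, so either of them can be slow, restarted, or replaced without the producer knowing.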

API Gateway

The API Gateway is a system that serves as an intermediary between the clients and the servers. It is essential when the application is based on microservices, as it offers a single entry point for requests, which it then routes to the various services.

Its role can encompass:

- Routing the requests

- Authentication and Authorization

- Rate limiting and throttling

- Logging

- Caching frequent queries

- Load balancing

- Transforming requests if needed

- Circuit breaking
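
A few of these responsibilities (authentication, rate limiting, routing) fit in a small sketch. The API key set, the route table and the per-key limit of 1 request per second with bursts of 2 are all hypothetical, and rate limiting uses a token bucket, one common choice among several. The clock is injectable so the behavior can be tested without sleeping.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float]) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical backend services, keyed by path prefix.
ROUTES: Dict[str, Callable[[str], str]] = {
    "/users": lambda path: f"user-service handled {path}",
    "/orders": lambda path: f"order-service handled {path}",
}
VALID_API_KEYS = {"secret-key-1"}  # hypothetical credential store


class ApiGateway:
    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}  # one rate limiter per API key

    def handle(self, path: str, api_key: str) -> Tuple[int, str]:
        if api_key not in VALID_API_KEYS:  # authentication
            return 401, "unauthorized"
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(rate=1, capacity=2, clock=self.clock)
        if not self.buckets[api_key].allow():  # rate limiting
            return 429, "too many requests"
        for prefix, service in ROUTES.items():  # routing
            if path == prefix or path.startswith(prefix + "/"):
                return 200, service(path)
        return 404, "no route"


gateway = ApiGateway()
print(gateway.handle("/users/7", "secret-key-1"))  # (200, 'user-service handled /users/7')
```

Checking authentication before creating a bucket means unknown clients cannot make the gateway allocate rate limiters for them.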

CDN

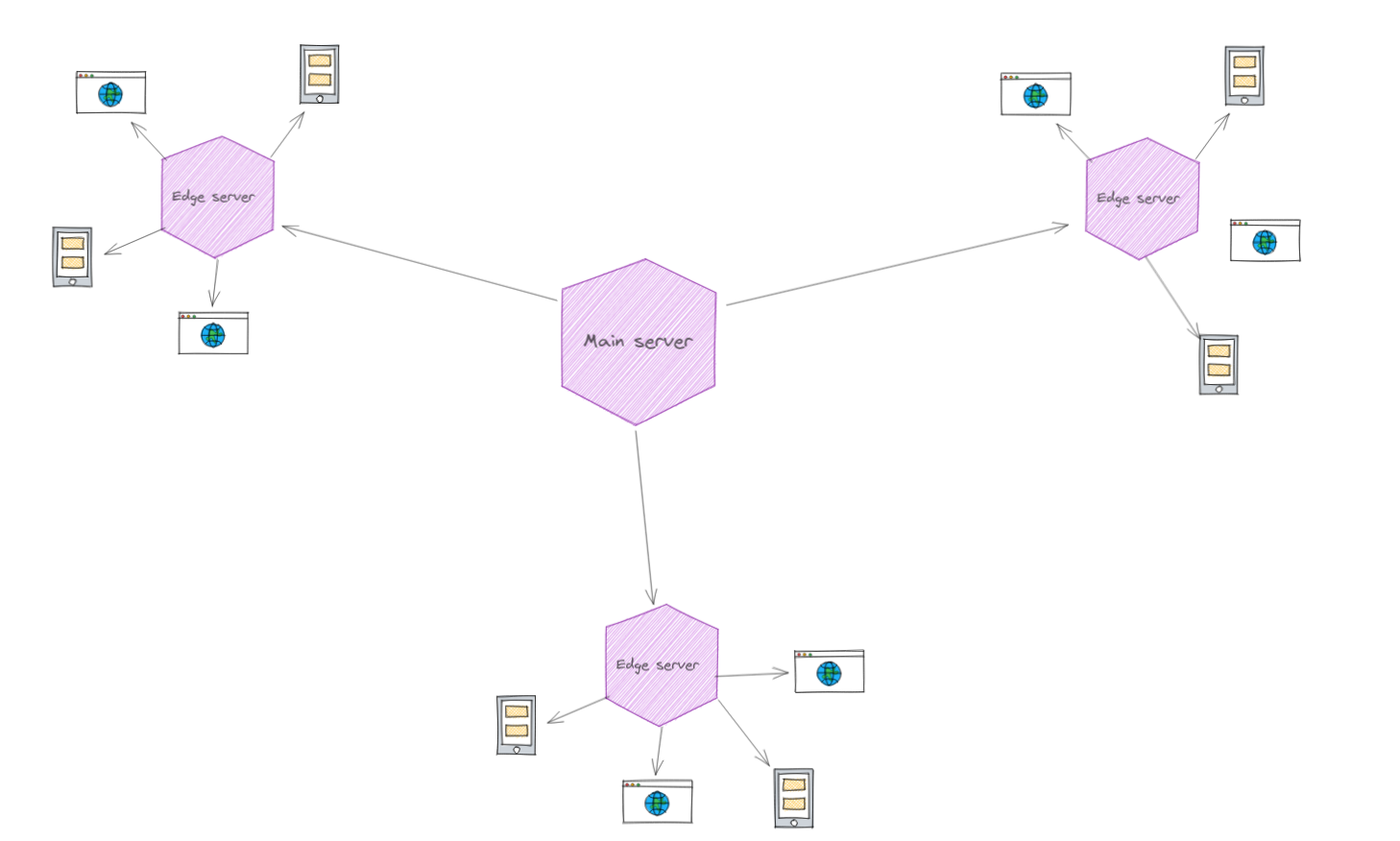

A CDN (Content Delivery Network) is simply a network of servers meant to deliver content from a location close to the user (i.e. at the edge). Reducing the distance between the user and the server reduces the time it takes to retrieve the data.

Usually, a CDN delivers the heavier assets, such as web pages, images, videos, etc.

In a nutshell, a CDN has the following characteristics:

- Servers distributed across different regions of the world.

- Content replicated across the edge servers.

- Geographical proximity to the users, through the different edge servers.

- Faster content delivery thanks to the shorter distance traveled by the data, reducing latency.

- Load balancing of the content delivery across the different edges.

- Caching of the content, reducing load times even further.
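
A rough sketch of two ideas from the list: sending each user to the closest edge, and caching content at that edge so the origin is only contacted on a miss. Real CDNs usually steer users with DNS or anycast based on network measurements rather than straight-line distance; here the edge list is hypothetical and great-circle (haversine) distance stands in for network proximity.

```python
from math import asin, cos, radians, sin, sqrt
from typing import Callable, Dict, Tuple

Coord = Tuple[float, float]  # (latitude, longitude) in degrees

# Hypothetical edge locations.
EDGES: Dict[str, Coord] = {
    "paris": (48.8566, 2.3522),
    "new-york": (40.7128, -74.0060),
    "tokyo": (35.6762, 139.6503),
}


def haversine_km(a: Coord, b: Coord) -> float:
    """Great-circle distance between two points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))  # 6371 km: mean Earth radius


def nearest_edge(user: Coord) -> str:
    return min(EDGES, key=lambda name: haversine_km(user, EDGES[name]))


class EdgeCache:
    """An edge serves from its local copy and only contacts the origin on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], str]) -> None:
        self.fetch_from_origin = fetch_from_origin
        self.store: Dict[str, str] = {}

    def get(self, path: str) -> str:
        if path not in self.store:  # miss: one round trip to the distant origin
            self.store[path] = self.fetch_from_origin(path)
        return self.store[path]


print(nearest_edge((51.5074, -0.1278)))  # a user in London -> paris
```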

Proxy

A proxy is simply a piece of hardware or software that sits between a client and a server.

Its usual uses are:

- Filtering requests

- Logging requests

- Transforming requests (adding/removing headers, encrypting/decrypting, compressing, etc.)
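
Here is a sketch of those three roles chained in one function. The `Request` type, the blocked host and the choice of dropping the `Cookie` header are illustrative assumptions; the `Via` header, however, is the standard HTTP header a proxy adds to identify itself.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable, Dict

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("proxy")

BLOCKED_HOSTS = {"ads.example.net"}  # hypothetical deny-list


@dataclass
class Request:
    host: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


def handle_through_proxy(request: Request, forward: Callable[[Request], str]) -> str:
    # 1. Filtering: refuse requests to blocked hosts.
    if request.host in BLOCKED_HOSTS:
        return "403 Forbidden"
    # 2. Logging: record every request that is let through.
    log.info("%s %s", request.host, request.path)
    # 3. Transforming: drop the Cookie header and add a Via header naming the proxy.
    headers = {k: v for k, v in request.headers.items() if k.lower() != "cookie"}
    headers["Via"] = "1.1 toy-proxy"
    return forward(Request(request.host, request.path, headers))


upstream = lambda req: f"200 OK ({sorted(req.headers)})"  # hypothetical upstream server
print(handle_through_proxy(Request("example.org", "/", {"Cookie": "id=1"}), upstream))
# 200 OK (['Via'])
```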

There are two important types of proxies to know:

Forward proxy

A forward proxy, also known as a proxy server or simply a proxy, primarily serves as an intermediary between client devices and the web. It acts on behalf of the clients to request resources from web servers. It is often configured on the client device or at the network level.

Reverse proxy

A reverse proxy, also known as a web reverse proxy or application gateway, primarily serves as an intermediary between client requests from the internet and the backend servers that host web applications or services. It operates on behalf of the web servers: clients talk to the proxy without knowing which backend actually handles their request.
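
To contrast it with a forward proxy, here is a minimal sketch of a reverse proxy's routing decision: clients only ever talk to the proxy, which maps the request path to a pool of internal backends and rotates through them. The path prefixes and private IP addresses are hypothetical, and actually forwarding the HTTP request is left out.

```python
from typing import Dict, List, Optional

# Hypothetical internal backends (private addresses clients never see).
BACKENDS: Dict[str, List[str]] = {
    "/api/": ["10.0.0.11:8080", "10.0.0.12:8080"],
    "/static/": ["10.0.0.21:9000"],
}


class ReverseProxy:
    def __init__(self, backends: Dict[str, List[str]]) -> None:
        self.backends = backends
        self.counters = {prefix: 0 for prefix in backends}

    def upstream_for(self, path: str) -> Optional[str]:
        """Pick the backend that should serve `path`, or None if nothing matches."""
        for prefix, servers in self.backends.items():
            if path.startswith(prefix):
                i = self.counters[prefix]
                self.counters[prefix] = i + 1
                return servers[i % len(servers)]  # round-robin inside the pool
        return None


proxy = ReverseProxy(BACKENDS)
print(proxy.upstream_for("/api/users"))  # 10.0.0.11:8080
print(proxy.upstream_for("/api/users"))  # 10.0.0.12:8080
```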

Cache

A cache is a storage layer that temporarily holds frequently accessed or recently used data.

Its purpose is to improve a system’s performance and response times by reducing the need to fetch data from slower and larger storage resources, such as databases or remote servers. To do so, a cache is often a piece of software that stores the data in memory.
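
A minimal in-memory cache with expiring entries, used in the common cache-aside pattern: read from the cache, and on a miss load from the slow store and populate the cache. The TTL value and the `load_from_db` callable are hypothetical, and this sketch cannot cache `None` values, since `None` signals a miss.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._data: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:  # stale: drop it and report a miss
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


def get_user(user_id: str, cache: TTLCache, load_from_db: Callable[[str], Any]) -> Any:
    """Cache-aside read: try the cache, fall back to the slow store, then fill the cache."""
    cached = cache.get(user_id)
    if cached is not None:
        return cached
    value = load_from_db(user_id)  # hypothetical slow lookup
    cache.set(user_id, value)
    return value
```

The TTL bounds how stale a cached value can get; a shorter TTL means fresher data but more trips to the slow store.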

Caching is key to improving the overall performance of systems where some operations are expensive, in terms of time taken, CPU usage, etc.

For instance, if we were to design a search system, caching the results of the most frequent queries could reduce heavy CPU usage and the latency it causes.

Bonus: Personal take

I wonder if many people have had as hard a time as I did grasping the concepts of application design, and understanding how and why an application is composed of these different building blocks.

For me, these difficulties mainly came from being confused about the nature of each of these components.

I kept getting stuck on why a given piece of software was said to be the best at doing X or Y, wondering if I was missing some information about the hardware or something else.

In the end, just thinking about each of these building blocks as a simple server running a single piece of software, crafted for a specific purpose, helped me abstract away the underlying complexities.

Further reading

To go further into system design, I highly recommend checking out these resources:

- [GitHub repo] System design primer

- [GitHub repo] Grokking system design

- [Book] Designing Data-Intensive Applications

- [Newsletter] Quastor

- [Blog] Scale your app

- [YouTube channel] Jordan has no life